TechBrothersIT is a blog and YouTube channel sharing real-world tutorials, interview questions, and examples on SQL Server (T-SQL, DBA), SSIS, SSRS, Azure Data Factory, GCP Cloud SQL, PySpark, ChatGPT, Microsoft Dynamics AX, Lifecycle Services, Windows Server, TFS, and KQL. Ideal for data engineers, DBAs, and developers seeking hands-on, step-by-step learning across Microsoft and cloud platforms.

Load an Excel File with Multiple Sheets Dynamically by Using the Lookup Activity in Azure Data Factory

How to Load Data from Multiple Excel Sheets to Azure SQL Tables in Azure Data Factory

Issue: How to load data from multiple Excel sheets into Azure SQL tables in Azure Data Factory.

How to Create a pipeline:
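A dynamic pipeline of this kind usually pairs a Lookup activity with a ForEach activity. The Python sketch below only imitates that control flow; it is not Data Factory code. It assumes a hypothetical list of sheet-to-table mappings (the `SheetName`/`TableName` fields and the sheet and table names are made up for illustration). In Azure Data Factory, a Lookup with "First row only" unchecked returns its rows under `output.value`, and a ForEach iterating over `@activity('LookupSheets').output.value` (the activity name is hypothetical) would pass each sheet name to a parameterized Excel source dataset and each table name to a parameterized Azure SQL sink dataset.

```python
from typing import Any, Dict, List

# Hypothetical Lookup activity output. With "First row only" unchecked,
# the rows appear under "value" and the row count under "count".
lookup_output: Dict[str, Any] = {
    "count": 3,
    "value": [
        {"SheetName": "Customers", "TableName": "dbo.Customers"},
        {"SheetName": "Orders", "TableName": "dbo.Orders"},
        {"SheetName": "Products", "TableName": "dbo.Products"},
    ],
}


def build_copy_runs(output: Dict[str, Any]) -> List[Dict[str, str]]:
    """Imitate a ForEach over output.value: one Copy run per sheet."""
    runs: List[Dict[str, str]] = []
    for item in output["value"]:  # like @activity('LookupSheets').output.value
        runs.append(
            {
                # Would feed the sheet name parameter of the Excel source dataset.
                "source_sheet": item["SheetName"],
                # Would feed the table name parameter of the Azure SQL sink dataset.
                "sink_table": item["TableName"],
            }
        )
    return runs


if __name__ == "__main__":
    for run in build_copy_runs(lookup_output):
        print(f"Copy sheet {run['source_sheet']!r} -> table {run['sink_table']}")
```

Driving the loop from a list rather than hard-coding one Copy activity per sheet is what makes the pipeline dynamic: adding a sheet only means adding a row to the lookup source.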

How to Deploy an SSIS Project to an Azure File Share and Call SSIS Packages from an Azure Data Factory Pipeline

Issue: How to deploy an SSIS project to an Azure file share and call the SSIS packages from an Azure Data Factory pipeline.

How to Create an SSIS Package:

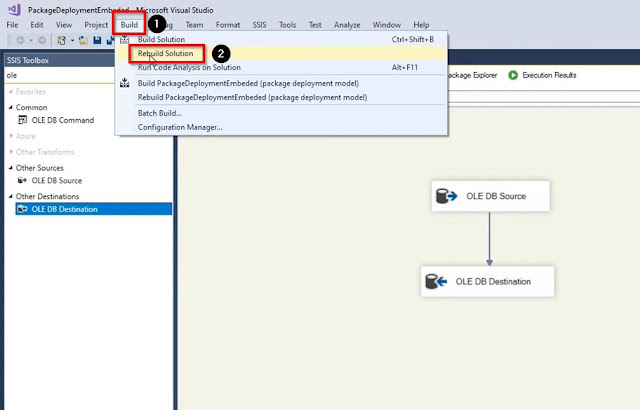

Next, add a Data Flow Task. Inside the Data Flow Task, add an OLE DB Source and an OLE DB Destination, then configure both to connect to the SQL Server database.
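Conceptually, the Data Flow Task reads rows through the OLE DB Source and writes them through the OLE DB Destination. The sketch below imitates that source-to-destination flow in plain Python, using two in-memory SQLite databases as stand-ins for the SQL Server source and destination. SQLite, the `Customer` table, its columns, and the helper names are assumptions for illustration only; this is not how SSIS is implemented.

```python
import sqlite3
from typing import List, Tuple


def make_demo_databases() -> Tuple[sqlite3.Connection, sqlite3.Connection]:
    """Create stand-in source and destination databases (hypothetical schema)."""
    source = sqlite3.connect(":memory:")       # plays the role of the source database
    destination = sqlite3.connect(":memory:")  # plays the role of the destination database
    for conn in (source, destination):
        conn.execute("CREATE TABLE Customer (id INTEGER PRIMARY KEY, name TEXT)")
    source.executemany("INSERT INTO Customer VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
    source.commit()
    return source, destination


def copy_rows(src: sqlite3.Connection, dst: sqlite3.Connection, table: str) -> int:
    """Read every row from `table` in src and insert it into `table` in dst.

    Stand-in for an OLE DB Source -> OLE DB Destination data flow.
    `table` is assumed to be a trusted name because it is not parameterized.
    """
    rows: List[Tuple] = src.execute(f"SELECT id, name FROM {table}").fetchall()
    dst.executemany(f"INSERT INTO {table} (id, name) VALUES (?, ?)", rows)
    dst.commit()
    return len(rows)


if __name__ == "__main__":
    src_db, dst_db = make_demo_databases()
    print(f"Copied {copy_rows(src_db, dst_db, 'Customer')} rows")
```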

Click the OLE DB Source and, in the connection manager, click New. Provide the SQL Server name, select the authentication type, enter the username and password, and specify the database you will read the data from. Test the connection, and if it succeeds, click OK.

Once the OLE DB Source is ready, connect it to the OLE DB Destination. Click the OLE DB Destination and, in the connection manager, click New. Provide the server name, select the authentication type, enter the username and password, select or enter the database name, test the connection, and then click OK.
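Behind the dialog, an OLE DB connection manager keeps its settings as a connection string. The hypothetical helper below sketches how the fields entered above (server, authentication type, username, password, database) map onto common OLE DB connection string keywords. The `MSOLEDBSQL` provider and the sample server, database, and user names are assumptions; in practice SSIS builds this string for you, and how the password is saved depends on the package protection level.

```python
from typing import Optional


def build_oledb_connection_string(
    server: str,
    database: str,
    windows_auth: bool,
    user: Optional[str] = None,
    password: Optional[str] = None,
    provider: str = "MSOLEDBSQL",  # assumed provider; SSIS may choose another
) -> str:
    """Assemble an OLE DB connection string from connection-manager fields.

    Values containing ';' would need quoting, which this sketch does not handle.
    """
    parts = [f"Provider={provider}", f"Data Source={server}", f"Initial Catalog={database}"]
    if windows_auth:
        # Windows Authentication: credentials come from the running account.
        parts.append("Integrated Security=SSPI")
    else:
        # SQL Server Authentication needs both a user name and a password.
        if not user or not password:
            raise ValueError("SQL Server Authentication requires user and password")
        parts.append(f"User ID={user}")
        parts.append(f"Password={password}")
    return ";".join(parts) + ";"


if __name__ == "__main__":
    # Hypothetical source (Windows auth) and destination (SQL auth) connections.
    print(build_oledb_connection_string("myserver", "SourceDB", windows_auth=True))
    print(build_oledb_connection_string("myserver", "DestDB", False, "etl_user", "secret"))
```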