TechBrothersIT is a blog and YouTube channel sharing real-world tutorials, interview questions, and examples on SQL Server (T-SQL, DBA), SSIS, SSRS, Azure Data Factory, GCP Cloud SQL, PySpark, ChatGPT, Microsoft Dynamics AX, Lifecycle Services, Windows Server, TFS, and KQL. Ideal for data engineers, DBAs, and developers seeking hands-on, step-by-step learning across Microsoft and cloud platforms.

Label

- Azure Data Factory Interview Question & Answers

- Azure Data Factory Tutorial Step by Step

- C# Scripts

- DWH INTERVIEW QUESTIONS

- Google Cloud SQL Tutorial

- Kusto Query Language (KQL) Tutorial

- MS Dynamics AX 2012 R2 Video Tutorial

- MariaDB Admin & Dev Tutorial

- MySQL / MariaDB Developer Tutorial Beginner to Advance

- MySQL DBA Tutorial Beginner to Advance

- PySpark Tutorial for Beginners and Advanced Users

- SQL SERVER DBA INTERVIEW QUESTIONS

- SQL SERVER DBA Video Tutorial

- SQL Server / TSQL Tutorial

- SQL Server 2016

- SQL Server High Availability on Azure Tutorial

- SQL Server Scripts

- SQL Server on Linux Tutorial

- SSIS INTERVIEW QUESTIONS

- SSIS Video Tutorial

- SSRS INTERVIEW QUESTIONS

- SSRS Video Tutorial

- TSQL INTERVIEW QUESTIONS

- Team Foundation Server 2013 Video Tutorial

- Team Foundation Server 2015 Video Tutorial

- Windows 10

- Windows Server 2012 R2 Installation Videos

How to Use Switch Activity in Azure Data Factory - Azure Data Factory Tutorial 2021

How to use Private Endpoint for Azure SQL Database from Azure Data Factory - ADF Tutorial 2021

Issue: How to use Private Endpoint for Azure SQL Database from Azure Data Factory - ADF Tutorial 2021.

Fig-1: Create a new Linked service for Private Endpoint Connection.

Video Demo: How to use Private Endpoint for Azure SQL Database from Azure Data Factory

How to Manage the Size of the SSISDB (Catalog Database) SSIS Tutorial 2021

Issue: How to Manage the Size of the SSISDB (Catalog Database) SSIS Tutorial.

In this article, we are going to learn how to manage the size of the SSISDB (Catalog Database), as you guys know that we are deploying our packages to SSISDC nowadays, we are not deploying the packages to the file system anymore, so we have to take care of this database, because a lot of logins is created so there is so many information when we enable this SSISDB logs, so on each and every execution there are logs produced, that's why the database can grow huge, so we have to reduce the database by following some steps, first of all, we have to see the status of our some of the properties by following this query as shown in the picture below.

As you can see in the above picture that we used a query and the results are Operation_Cleanuo_enabled it is set to True, Retention_Window it is set to 365, Version_Cleanup_Enabled it is set to True, so that means I have already a maintenance job, let's create a maintenance job, we can create this job by using a query and also we can do by using Graphic user interface, I will explain the both first of all we are doing this by using the graphic user interface, right-click on the SSISDB under the Integration Services Catalog, then go to the properties, inside the properties click on the clean log periodically under the Operation log tab, then set it to ''YES'', then click on retention period tab and set it to ''90'' that means we want to keep the log file only for 90 days or you can select as per you requirement, then click on Maximum number of version per project and set it to ''5'' then click on periodically delete old versions under the project versions tab and set it to ''True'', and then click ok as shown in the picture below.

Fig-2: Maintenance job for SQL Server Integration Services Database.

The second method for creating the maintenance job is by query, you can create the job by using the below query.

EXEC catalog.configure_catalog VERSION_CLEANUP_ENABLED, TRUE

EXEC catalog.configure_catalog OPREATION_CLEANUP_ENABLED, TRUE

EXEC catalog.configure_catalog RETENTION_WINDOW, 90

Video Demo: How to Manage the Size of the SSISDB (Catalog Database) SSIS Tutorial 2021

How to Prevent Creation of Empty Files through Azure Data Factory ADF Tutorial 2021

Issue: How to prevent the creation of empty files through Azure Data Factory.

In this article, we are going to learn how to prevent the creation of empty files through Azure Data Factory, let's start our demonstration, go to the Azure data factory and click on the author and then click on Pipelines and select new pipeline, then name the pipeline and drag the copy data activity from activities tab, click on the copy data activity and click on the source, here we have to create and new source data set, to create new source data set click on + New button then select Azure SQL Database as connection and then click on continue, it will move to another window where we have to create a new linked service so click on +New button and then Name the linked service, select the Azure subscription, Authentication type, user name & Password, test the connection and then click on create, as shown in the picture below.

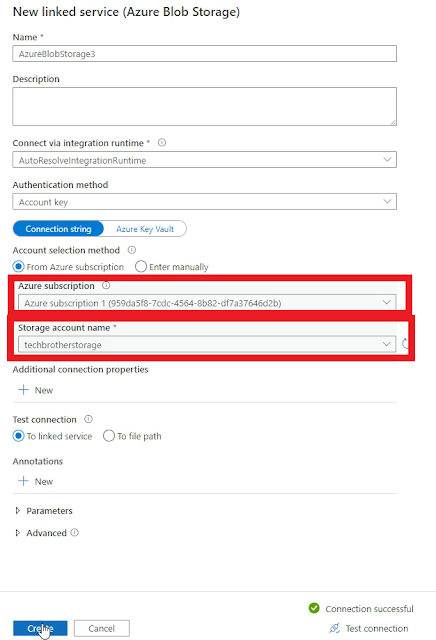

Now as we are done with our Source data set let's click on the sink tab and create a sink dataset where we write the data, click on the + New button then select Azure Blob Storage, and click on continue, select the delimited text which is CSV file, then click on continue, now the next window will ask for the linked service, so we have to create a new linked service which will connect with the output folder where we want to write the data, click on the + New button and then name the linked service, select the Azure subscription, Storage account name, test the connection and click on create. as shown in the picture below.

Once our linked service is created select the input folder as the sink folder where our data will be written, select the first row as header, then select none for import schema and click ok.

Once our sink dataset is created, just click on Debug, once debug process will be completed, go to your input folder, and your desired file will be there, now go back to our SSMS and delete the records from our file, and again click on debug, and this time it will overwrite and make the file empty, so in this scenario what we have to do we have to write logic and make a condition if there is no data in the file it will be notified and should not create any file.

Now go to the azure data factory and search and drag lookup, from the activities tab, click on lookup activity and go to the settings tab and create a new source dataset, select the Azure SQL Database as connection, click on continue and it will ask for the linked service, select the linked service which we have created already, select the table which data coming from, select none as import schema, and click ok, then checkmark the ''First row only'' box and then go to the activities tab and drag the if condition activity and place between the Lookup activity & Copy data activity, then click on If condition activity and go to the activities, and click on add dynamic content, then select the Contains function and then inside the contains function to select the lookup and then insert the first row, as shown in the picture below.

Once our If Condition activity is configured right click on copy data activity and Select cut, then click on True in If Condition Activity and open it and paste the copy data activity there, and then back to the main window. and Click on Debug, once debug process is completed, let's take a look at the output folder of lookup activity, as shown in the picture below there is no file or data copied.

Fig-4: Debug completion report

Now let's go to SQL Server Management Studio and insert some records in our table, once we insert the records go back to our Azure data factory and click on ''debug'', once our debug process is completed, this time it will read the data from the source and write it in the input folder because our file has the records, as you can see in the picture below.

Video Demo: How to Prevent Creation of Empty File through Azure Data Factory

What are Linked Services and DataSets in Azure Data Factory - ADF Tutorial 2021

Issue: What are Linked Services and DataSets in Azure Data Factory - ADF Tutorial 2021

In this article, we are going to learn What is Linked Services and DataSets in the Azure data factory, the Linked services are much like connection strings, which define the connection information needed for the service to connect to external resources. and the datasets represent the structure of the data within the linked data stores, and the linked service defines the connection to the data source.

Let's start our demonstration, open your Azure portal, and first of all, we need to create an Azure data factory, so in the Azure portal, we have a services tab on the top from where we can go to the Data factories, once we click on that it will open a new page click on Create data factory then Select the subscription, Resource group, Region, Name, and Version, then click on Review + Create and then click create. as shown in the picture below.

Once the Data factory is created open your Azure Data Factory Studio, once you ADF Studio opened let's make a Linked service, we can create a linked service by using pipeline, and also we can create linked service separately in the manage tab, let's create our linked service from the manage tab, go to the Manage tab and then in the connection tab we have linked services, click on +New then Select Azure SQL database as our connection, and then click on continue, then we will move to the new page which is New linked service, give the name as you can identify your linked service, in my case I gave it ''lnkazuresqltechbrothersdb'' then select the Azure subscription, select the database, Authentication type, username & password, Test the connection and click on create. as shown in the picture below.

Once we created the above-linked service, let's create a new database and then create a new linked service to the newly created database, go to the SQL Server Management Studio, and create a new database, in my case I have created a database named ''Sales'', and also create a table, then go to Azure Data factory Studio and create a new linked service, Click on + New, then select Azure SQL Database, then click continue, then name the database, in my case, it is ''lnksalesdb'', then select the Azure subscription, Authentication type, Username & password, test the connection and click on create, as shown in the picture below.

Now we have two linked services, one is ''lnkazuresqltechbrothersdb'' and second is ''lnksalesdb'', so we can read the data from one and write to another one, now go to your blob storage in the Azure portal and click on the containers tab under the data storage, and create two new containers, one for input and another one for output, once we created the containers, go back to the Azure data factory studio, and create a new linked service, to connect the techbrotheritstorage where our containers are located, Click on +New, then select the Azure Blob Storage as connection then click continue, and then name the linked service in my case it is ''lnktechbrotherstorage'', then select the Azure subscription then storage account and then test the connection and click create. as shown in the picture below.

Fig-4: Create a new linked service.

Now we have three linked services, and now we have to create pipelines & use them in our datasets, go to the author in Azure Data Factory Studio, and click on pipelines, then click on the new pipeline, then click on the copy data activity and drag it in the working window, click on the copy data activity and then click on the source, as you click on the source it will ask for the source dataset, from where we read the data, as we don't have any dataset so click on + New button to create a new dataset, once we click on New, it will ask for the data source, select the Azure SQL database as our data source, then name the dataset and select the linked service which we have created before, and then it will ask for the table, select the table which we have created before, then select import schema and then click ok.

As we have successfully created the dataset for read the data, now click on Sink and here we have to create a new dataset for write the data, so click on the + New button then select the connection source Azure SQL database, then click continue and then name the dataset, then select the linked service which we have created before, then table name and click ok, as shown in the picture below.

Once we have successfully created our datasets, now go to the main window and click on ''debug'', once the debug process will be completed it will show the results, and then you can go to your output folder and find the data files there.

Video Demo: What are Linked Services and DataSets in Azure Data Factory

How to Load JSON File to Azure SQL Database Table in Azure Data Factory ADF Tutorial 2021

Issue: How to Load JSON File to Azure SQL Database Table in Azure Data Factory.

Video Demo: How to Load JSON File to Azure SQL Database Table in Azure Data Factory

How to get String between two Underscores in SSIS Expressions - SSIS Real Time Example

Issue: How to get String between two Underscores in SSIS Expressions - SSIS Real Time Example

In this article, we are going to learn how to get string between two underscores in SSIS expressions - SSIS real-time example, let's start our demonstration, we are going to get a name of a file that's between two underscores, that's going to lead us another scenario how to use these files in the same foreach loop and loading different data flows, that's a very common scenario we often getting a mix of the file and we need to load them into different data flows, let's open the Visual Studio, in my case I have Visual Studio 2017, go to the SSIS Toolbox at the left side of the dashboard, and search for foreach loop container and bring it into the working window and inside the foreach loop we are going to point where our files are, double click on the foreach loop container and go to the collection, inside the collection we have to select Foreach file Enumerator, then brows for the folder and select your file folder, then in the Files: tab we need only .txt files, and then select Name & Extention, as shown in the picture below.

Then go to the Variable Mappings, click on the variable and name the variable in my case it is ''FullFileName'', and then click ok, as shown in the picture below.

Once we are done with our foreach loop configuration, go to the SSIS Toolbox, and search for the Script Task and bring it into the foreach loop window, then bring the data flow task under the script task, then bring the second data flow task and then bring the third data flow task and connect all the data flow tasks with script task nd rename the data flow task with you file names, as shown in the picture below.

Video Demo: How to get String between two Underscores in SSIS Expressions

How to find out who has deleted Azure Data Factory or other Resources in Azure - Azure Tutorial 2021

Issue: How to find out who has deleted Azure Data Factory or other Resources in Azure.

Video Demo: How to find out who has deleted Azure Data Factory or other Resources in Azure

How to Convert JSON File to CSV File in Azure Data Factory - ADF Tutorial 2021

Issue: How to Convert JSON File to CSV File in Azure Data Factory - ADF Tutorial 2021

In this article, we are going to learn how to convert JSON file to CSV file in the Azure data factory, let's start the demonstration, as we have the .JSON file in our Input container, now we have to convert this .JSON file to .CSV file, first of all, we have to create a folder for our CSV file, let's make a folder, go to the blob storage, then click on container under the data storage tab then click on + Container button, it will open a window at the right side of the dashboard, then name the container, and then click create, in my case I have made a folder named output, s shown in the picture below.

Once we created our destination folder let's go to the Azure data factory and make a new pipeline, to create a pipeline click on Pipeline, then click on New pipeline, name the pipeline and search for copy data activity and drag into the working window, then click on copy data activity and go to the source, as shown in the picture below, and here in the source click on the new source dataset, select azure blob storage and then click on continue, select the JSON file which is our input file, then click on continue, name the file, and create a new link service, click on new then select the Azure subscription and storage account name and test the connection and then click create, then select the input file path, select From connection/store as import schema, and then click ok.

Click on the Sink tab and inside the sink tab we have to create a new Sink dataset, click on the + New button then select Azure blob storage and select delimited text (CSV) which is our output file format, then click on continue, and name the dataset as Output, then select the linked service, which we created earlier, then select the output folder and name the output file, select first row as header, and select ''none'' as import schema, and then click ok, as shown in the picture below.

The next step is mapping, go to the Mapping tab and then click on Import Schemas, inside the schemas you can add or remove columns or change the data format if required, as shown in the picture below.

Fig-4: Configure mappings and import schemas.

Once you are done with your selection click on Debug, and it will start the execution process, when debugging will be completed you will be notified and can see the CSV file in your output folder as shown in the picture below.

Video Demo: How to Convert JSON File to CSV File in Azure Data Factory - ADF Tutorial

How to use Copy Activity to Read Json File & Limitation of Copy Activity - single array is supported ADF Tutorial 2021

Issue: How to use Copy Activity to Read Json File & Limitation of Copy Activity - the single array is supported in Azure data factory.

In this article we are going to learn how to use copy activity to read JSON file & limitation of copy activity - the single array is supported, let's start our demonstration, Open Azure data factory studio, then click on Pipeline and then select New pipeline on the left side of the dashboard, bring the copy activity in the working window, then click on copy activity then go to the source tab and select the +New in source dataset option, then search for azure blood storage and select, then click on continue, then select JSON, then click continue as shown in the picture below.

Fig-1:Create a new pipeline.

Then it will ask for linked service, click + New, it will open a window, select the Azure subscription and then storage account name, and then click on create as shown in the picture below.

Fig-2: Create a new linked service.

In the dataset, properties select the path as input folder, then select from connection/store as import schema and click ok as shown in the picture below.

Fig-3: Create a new source dataset.

Once our source dataset is created go to the sink and create our CSV file, click on + New then select Azure blob storage, then click continue, then select ''delimited text'' which is CSV format, then click on continue, then click ok and select the linked service and provide the output path and click none as Import schema, and then click ok, as shown in the picture below.

Fig-4: Create new dataset & import schema.

Then click on Sink and provide the output path which his ''OutputJson.csv'', then go back to your pipeline and click on the mapping tab, then click on Import Schemas, then click on debug, once you click on debug it will start the execution process and it will take some time to be completed. after completion, you can see your executed file in your output folder, the path we selected for our output file.

Video Demo: How to use Copy Activity to Read Json File & Limitation of Copy Activity

How to Read Json File with Multiple Arrays By using Flatten Activity ADF Tutorial 2021

Issue: How to Read JSON File with Multiple Arrays By using Flatten Activity.

Fig-1: Create a new linked service.

Fig-2: Got an error during the process.