Issue: How to Read a JSON File with Multiple Arrays by Using the Flatten Activity.

In this article, we are going to learn how to read JSON files with multiple arrays by using the Flatten activity in Azure Data Factory. Let's start the demonstration. Open your Azure Data Factory, click on Pipeline, and then select New pipeline on the left side of the dashboard. Name the pipeline and bring the Data flow task into the working window. Double-click the data flow and then click on Data flow debug. It will ask for the debug time to live; in my case I am selecting 1 hour. Then click OK.

Once the debug settings are in place, double-click the data flow, go to the Settings tab, and click New. This opens the editor where we can work on the data flow. Click "Add source", then go to Source settings. Next to the Dataset option, click the + New button. In the window that opens we have to create a new dataset: select Azure Blob Storage and click Next, then select JSON as the format and click Continue.

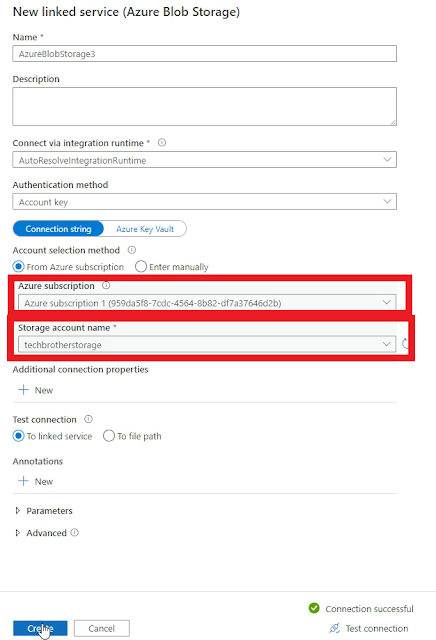

Once you click Continue, it will ask for a linked service, so we have to create a new one. Click + New, which opens a new window as shown in the picture below. Select the Azure subscription and the storage account name, click Test connection, and finally click Create.

Fig-1: Create a new linked service.

Fig-2: Got an error during the process.

Solution:

To resolve this error, click on the Source options tab, go to JSON settings, and set Document form to "Array of documents", as shown in the picture below.

Fig-3: Solution to the error.

Once you set Document form to "Array of documents", go to the Data preview tab and click Refresh. It will show the data without any error, as shown in the picture below.

Fig-4: Data preview succeeds without any error.
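The article does not quote the error text, so the following is only a rough illustration of why the document form can matter. It is a plain Python sketch, not Azure Data Factory code: the sample data, the field names `name` and `skills`, and both helper functions are hypothetical. It shows that a file whose top level is a JSON array cannot be parsed one line at a time, but parses fine when read as an array of documents.

```python
import json

# Hypothetical sample: the whole file is ONE JSON array spread over several lines.
raw = """[
  {"name": "A", "skills": ["x", "y"]},
  {"name": "B", "skills": ["z"]}
]"""


def parse_document_per_line(text):
    """Treat every non-empty line as a standalone JSON document."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def parse_array_of_documents(text):
    """Treat the whole text as a single JSON array of documents."""
    docs = json.loads(text)
    if not isinstance(docs, list):
        raise ValueError("expected a top-level JSON array")
    return docs


# Line-by-line parsing fails on the very first line, which is just "[".
try:
    parse_document_per_line(raw)
    per_line_ok = True
except json.JSONDecodeError:
    per_line_ok = False

# Reading the text as an array of documents succeeds.
docs = parse_array_of_documents(raw)
```

Here `per_line_ok` ends up `False` while `docs` holds two records, which is the same kind of mismatch the Document form setting is meant to resolve.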

Now that source 1 is created successfully, click the + button and add the Flatten activity. Click on the Flatten activity to show the Settings tab, and inside it select the options as per your requirement, as shown in the picture below.

Fig-5: Create flatten activity.
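To make the idea of "unroll by" concrete, here is a minimal Python sketch of what flattening an array column does conceptually: each element of the array becomes its own output row, and the other columns are repeated. This is an illustration only, not the Data Factory implementation. The `flatten` helper, the sample rows, and the `skills` array name are hypothetical, since the article does not name the first array.

```python
def flatten(rows, unroll_by):
    """Return one output row per element of each row's `unroll_by` array.

    Assumption of this sketch: rows whose array is missing or empty
    produce no output rows. The real service may behave differently.
    """
    out = []
    for row in rows:
        for item in row.get(unroll_by) or []:
            # Copy every other column unchanged, then replace the array
            # with the single element being unrolled.
            new_row = {k: v for k, v in row.items() if k != unroll_by}
            new_row[unroll_by] = item
            out.append(new_row)
    return out


# Hypothetical input: two records, each with an array column.
rows = [
    {"name": "A", "skills": ["x", "y"]},
    {"name": "B", "skills": ["z"]},
]

flat = flatten(rows, "skills")
```

With this input, `flat` contains three rows: two for record A (one per skill) and one for record B.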

Once we are done with the first Flatten activity, click the + sign again and add another Flatten activity. In the second Flatten activity, select "social media" for Unroll by, and then add another column named "social media link", as shown in the picture below.

Fig-6: Create another flatten activity.
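The second step can be pictured the same way. The sketch below, again plain Python rather than Data Factory code, unrolls an array of objects and maps one field of each element to a new output column. The column names "social media" and "social media link" come from the article; everything else (the `unroll_with_mapping` helper, the `site` and `link` field names, the `skill` column, and the example URLs) is a hypothetical stand-in, because the article does not show the actual JSON.

```python
def unroll_with_mapping(rows, unroll_by, mapping):
    """Unroll an array of objects and project fields into new columns.

    `mapping` maps output column name -> field name inside each array element.
    """
    out = []
    for row in rows:
        for item in row.get(unroll_by) or []:
            # Keep the remaining columns, drop the array itself.
            new_row = {k: v for k, v in row.items() if k != unroll_by}
            # Add the requested columns taken from the unrolled element.
            for column, field in mapping.items():
                new_row[column] = item.get(field)
            out.append(new_row)
    return out


# Hypothetical row as it might look after a first flatten step.
rows = [
    {
        "name": "A",
        "skill": "x",
        "social media": [
            {"site": "site-1", "link": "https://example.com/a"},
            {"site": "site-2", "link": "https://example.com/b"},
        ],
    }
]

result = unroll_with_mapping(rows, "social media", {"social media link": "link"})
```

Each element of the "social media" array yields one row carrying its link in the new "social media link" column, so `result` has two rows here.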

Once we are done with the second Flatten activity, click the + sign and add the Sink activity. Inside the sink we are going to create a new dataset: click + New, select Azure Blob Storage and click Continue, select CSV as the file format and click Continue, then select the linked service and choose the output container. Select First row as header, select None for Import schema, and click OK. Now go to the Settings tab, select "Output to single file" as the File name option, and provide the file name. Then go to the Optimize tab and select "Single partition". Once we are done with the sink, go back to the pipeline and click Debug. As you can see in the picture below, our data flow task completed successfully without any error.
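For readers who want to picture the output, the following Python sketch writes flattened rows to a single CSV with the first row as header, which is roughly what the sink settings above ask for. It uses only the standard `csv` module; the `rows_to_csv` helper and the sample rows are hypothetical, and the sketch writes to an in-memory string rather than to blob storage.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize rows to one CSV string with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()    # first row as header
    writer.writerows(rows)  # one CSV line per flattened row
    return buf.getvalue()


# Hypothetical flattened rows, shaped like the output of the second flatten.
flattened = [
    {"name": "A", "social media link": "https://example.com/a"},
    {"name": "B", "social media link": "https://example.com/b"},
]

csv_text = rows_to_csv(flattened, ["name", "social media link"])
```

The resulting text starts with the header line `name,social media link`, followed by one line per row.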
