Topic: Azure Data Factory Tutorial for Beginners (2021)

Azure Data Factory is Azure's cloud ETL (extract, transform, and load) service for scale-out, serverless data integration and data transformation. It offers a code-free UI for intuitive authoring and single-pane-of-glass monitoring and management. You can also lift and shift existing SQL Server Integration Services (SSIS) packages to Azure and run them with full compatibility in Azure Data Factory.

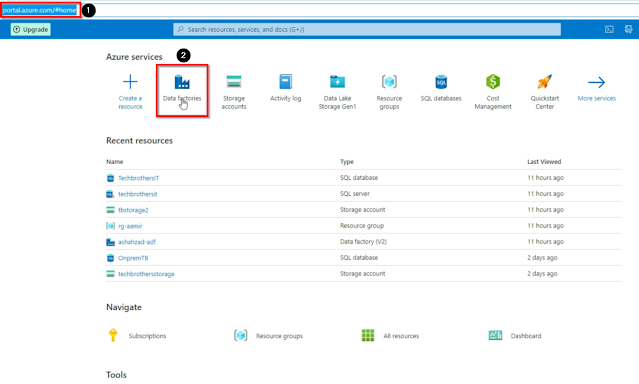

In this article, we look at Azure Data Factory and explain some of its major features. To start, sign in to the Azure portal, which is where you create and manage Azure Data Factory.

In the Azure portal, open the Data factories service to create a new data factory.

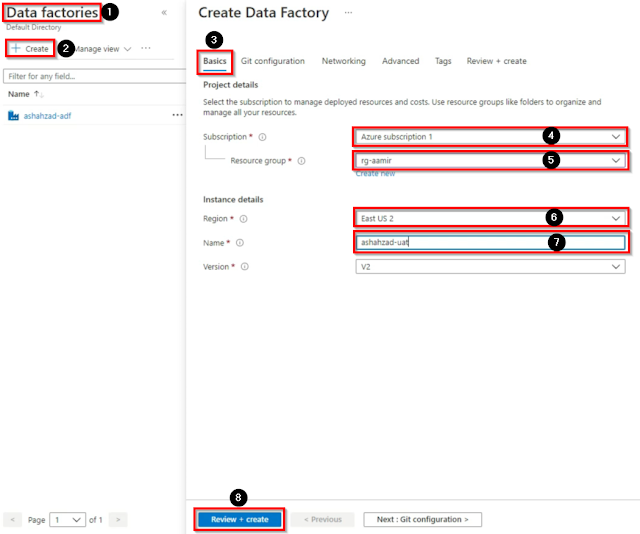

To create the data factory:

1. In Data factories, select + Create.
2. On the Basics tab, select your Azure subscription and resource group, choose a region, and enter a name for the data factory. The name must be globally unique.
3. On the Git configuration tab, select Configure Git later.
4. Select Review + create, and then select Create.
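You can also express the portal steps above as an Azure Resource Manager (ARM) template. The sketch below is a minimal Python helper that generates that template as JSON. The function name `build_factory_template` and the values `my-adf-demo` and `eastus` are hypothetical. The subscription and resource group do not appear in the template because you choose them when you deploy it.

```python
import json


def build_factory_template(factory_name: str, location: str) -> dict:
    """Hypothetical helper: a minimal ARM template for a single data factory."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "factoryName": {"type": "string", "defaultValue": factory_name},
            "location": {"type": "string", "defaultValue": location},
        },
        "resources": [
            {
                "type": "Microsoft.DataFactory/factories",
                "apiVersion": "2018-06-01",
                "name": "[parameters('factoryName')]",
                "location": "[parameters('location')]",
                # Give the factory a system-assigned managed identity.
                "identity": {"type": "SystemAssigned"},
                # No repoConfiguration here, which matches "Configure Git later".
                "properties": {},
            }
        ],
    }


template = build_factory_template("my-adf-demo", "eastus")
print(json.dumps(template, indent=2))
```

To deploy it, save the printed JSON to a file and run `az deployment group create --resource-group <your-resource-group> --template-file <file>`. The deployment goes to whichever subscription the Azure CLI is currently set to.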

Once the data factory is created, go to the resource and open Azure Data Factory Studio. The studio has four main tabs: Home, Author, Monitor, and Manage. The Home tab offers several shortcuts:

- Create pipeline
- Create data flow
- Create pipeline from template
- Copy data
- Configure SSIS integration
- Set up code repository

A pipeline is where you build ETL (extract, transform, and load) activities. It is a logical grouping of activities that together perform a unit of work. For example, a pipeline can contain a group of activities that ingests data from Azure Blob storage and then runs a Hive query on an HDInsight cluster to partition the data.
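Here is a sketch of the Blob-then-Hive example as pipeline JSON, built in Python. The activity, dataset, and linked service names and the script path are hypothetical placeholders. The `execution_order` helper illustrates how `dependsOn` chains activities into one unit of work. It is not how the Data Factory service schedules runs: activities with no dependency between them can run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical names: "BlobInput", "BlobStaging", "HDInsightLS", "StorageLS",
# and the script path are placeholders, not real resources.
pipeline = {
    "name": "IngestAndPartition",
    "properties": {
        "activities": [
            {
                "name": "CopyFromBlob",
                "type": "Copy",
                "inputs": [{"referenceName": "BlobInput", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "BlobStaging", "type": "DatasetReference"}],
                "typeProperties": {"source": {"type": "BlobSource"}, "sink": {"type": "BlobSink"}},
            },
            {
                "name": "PartitionWithHive",
                "type": "HDInsightHive",
                # Run the Hive step only after the copy step succeeds.
                "dependsOn": [{"activity": "CopyFromBlob", "dependencyConditions": ["Succeeded"]}],
                "linkedServiceName": {"referenceName": "HDInsightLS", "type": "LinkedServiceReference"},
                "typeProperties": {
                    "scriptPath": "scripts/partition.hql",
                    "scriptLinkedService": {"referenceName": "StorageLS", "type": "LinkedServiceReference"},
                },
            },
        ]
    },
}


def execution_order(pipeline_json: dict) -> list[str]:
    """Return activity names in an order that respects every dependsOn edge."""
    graph = {
        act["name"]: {dep["activity"] for dep in act.get("dependsOn", [])}
        for act in pipeline_json["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


print(execution_order(pipeline))  # ['CopyFromBlob', 'PartitionWithHive']
```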

Next is Copy data. In Azure Data Factory and Synapse pipelines, you can use the Copy activity to copy data among data stores located on-premises and in the cloud. After you copy the data, you can use other activities to further transform and analyze it. You can also use the Copy activity to publish transformation and analysis results for business intelligence and application consumption.
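A Copy activity has three parts: one input dataset, one output dataset, and a `source`/`sink` pair that says how to read and write the data. The sketch below assumes a copy from an on-premises SQL Server to Azure SQL Database. `make_copy_activity` and the dataset names are hypothetical. `SqlServerSource` and `AzureSqlSink` are Copy activity source and sink types.

```python
import json


def make_copy_activity(name: str, source_dataset: str, sink_dataset: str,
                       source_type: str, sink_type: str) -> dict:
    """Hypothetical helper: one input, one output, and how to read/write them."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": source_type},  # how data is read
            "sink": {"type": sink_type},      # how data is written
        },
    }


# Hypothetical dataset names. Reaching an on-premises SQL Server also needs a
# self-hosted integration runtime, configured on the linked service, not here.
activity = make_copy_activity(
    "CopyOrdersToCloud", "OnPremOrders", "AzureSqlOrders", "SqlServerSource", "AzureSqlSink"
)
print(json.dumps(activity, indent=2))
```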

Finally, there is Set up code repository, where you connect the factory to Git and enter your Azure Repos code repository name. Azure Repos projects contain Git repositories to manage your source code as your project grows. You can create a new repository or use an existing repository that's already in your project.
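When Git is configured, the factory keeps the repository settings in a `repoConfiguration` block. The sketch below assumes Azure Repos, whose configuration type is `FactoryVSTSConfiguration`. The helper name and all values (organization, project, repository, branch) are hypothetical.

```python
import json


def azure_repos_config(organization: str, project: str, repository: str,
                       branch: str = "main", root_folder: str = "/") -> dict:
    """Hypothetical helper: repoConfiguration for an Azure Repos Git repository."""
    return {
        "type": "FactoryVSTSConfiguration",
        "accountName": organization,    # Azure DevOps organization
        "projectName": project,         # Azure DevOps project that holds the repository
        "repositoryName": repository,   # your Azure Repos code repository name
        "collaborationBranch": branch,  # branch changes are merged into and published from
        "rootFolder": root_folder,      # folder in the repo where factory JSON is stored
    }


repo = azure_repos_config("contoso", "DataPlatform", "adf-repo")
print(json.dumps({"properties": {"repoConfiguration": repo}}, indent=2))
```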

Then there is the ARM template option, another important tool. With it you can export (back up) your entire data factory as an Azure Resource Manager (ARM) template, or import a data factory you backed up earlier.
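An exported factory template lists each pipeline, dataset, trigger, and similar component as a child resource of the factory. The factory name is supplied as a `factoryName` parameter. The sketch below does two things: it lists what a backup contains, and it builds a parameters file for importing the backup into another factory. The template is heavily trimmed, so it is not deployable as-is. The helper names, component names, and target factory name are hypothetical.

```python
import json
import re

# A heavily trimmed, hypothetical example of an exported factory template.
exported = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {"factoryName": {"type": "string"}},
    "resources": [
        {
            "name": "[concat(parameters('factoryName'), '/IngestAndPartition')]",
            "type": "Microsoft.DataFactory/factories/pipelines",
            "apiVersion": "2018-06-01",
            "properties": {"activities": []},
        },
        {
            "name": "[concat(parameters('factoryName'), '/HourlyTrigger')]",
            "type": "Microsoft.DataFactory/factories/triggers",
            "apiVersion": "2018-06-01",
            "properties": {},
        },
    ],
}


def list_components(template: dict) -> list[tuple[str, str]]:
    """Return (component kind, component name) for each child resource."""
    items = []
    for res in template["resources"]:
        kind = res["type"].rsplit("/", 1)[-1]           # e.g. "pipelines"
        match = re.search(r"'/([^']+)'", res["name"])  # the part after the factory name
        items.append((kind, match.group(1) if match else res["name"]))
    return items


def parameters_for(target_factory: str) -> dict:
    """Parameters file that points the backed-up template at a target factory."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {"factoryName": {"value": target_factory}},
    }


print(list_components(exported))  # [('pipelines', 'IngestAndPartition'), ('triggers', 'HourlyTrigger')]
print(json.dumps(parameters_for("my-adf-restore"), indent=2))
```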

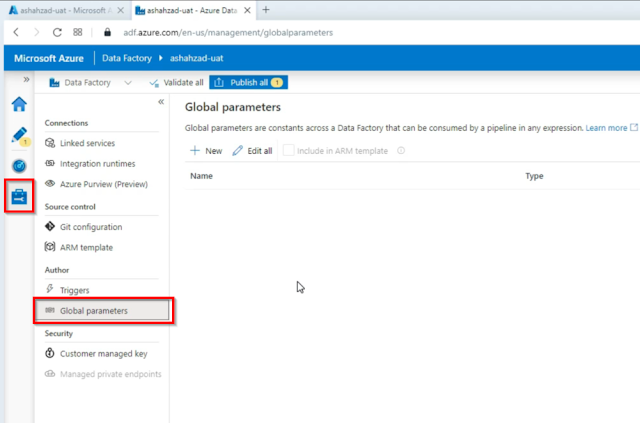

Under Author (in the Manage tab), we have Triggers. Triggers are the objects that start your pipeline runs, for example on a schedule.
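A schedule trigger is one common trigger type. The sketch below builds the JSON for an hourly schedule trigger in Python. The trigger and pipeline names are hypothetical. `next_runs` is a simplified illustration that handles only minute, hour, and day frequencies; it is not the service's own scheduling logic.

```python
import json
from datetime import datetime, timedelta, timezone

# Simplified step sizes; "Week" and "Month" recurrences are left out of this sketch.
_STEP = {"Minute": timedelta(minutes=1), "Hour": timedelta(hours=1), "Day": timedelta(days=1)}


def schedule_trigger(name: str, pipeline_name: str, frequency: str, interval: int,
                     start: datetime) -> dict:
    """Build a ScheduleTrigger definition that runs one pipeline."""
    start_utc = start.astimezone(timezone.utc)  # normalise to UTC before adding "Z"
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,  # e.g. "Minute", "Hour", "Day"
                    "interval": interval,    # fire every `interval` units of `frequency`
                    "startTime": start_utc.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {"type": "PipelineReference",
                                          "referenceName": pipeline_name},
                    "parameters": {},
                }
            ],
        },
    }


def next_runs(start: datetime, frequency: str, interval: int, count: int) -> list[datetime]:
    """List the first `count` fire times (hypothetical helper, simple cases only)."""
    if frequency not in _STEP:
        raise ValueError(f"unsupported frequency in this sketch: {frequency}")
    step = _STEP[frequency] * interval
    return [start + i * step for i in range(count)]


trigger = schedule_trigger("HourlyTrigger", "IngestAndPartition", "Hour", 1,
                           datetime(2021, 6, 1, tzinfo=timezone.utc))
print(json.dumps(trigger, indent=2))
```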

Under Security, we have the customer-managed key. When you specify a customer-managed key, Data Factory uses both the factory system key and the customer-managed key to encrypt customer data. If either key is missing, access to the data and the factory is denied. Azure Key Vault is required to store customer-managed keys.
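In the factory's definition, an `encryption` block describes the customer-managed key by pointing at the key in Azure Key Vault. This is a minimal sketch that assumes such a block. The helper name, vault URL, and key name are hypothetical. The checks only enforce that the caller supplies a key name and an https vault URL.

```python
import json
from typing import Optional
from urllib.parse import urlparse


def cmk_encryption(vault_base_url: str, key_name: str,
                   key_version: Optional[str] = None,
                   user_assigned_identity: Optional[str] = None) -> dict:
    """Hypothetical helper: an encryption block for a customer-managed key in Key Vault."""
    if not key_name:
        raise ValueError("key_name is required")
    if urlparse(vault_base_url).scheme != "https":
        raise ValueError("vault_base_url must be an https Key Vault URL")
    block = {"vaultBaseUrl": vault_base_url, "keyName": key_name}
    if key_version:
        block["keyVersion"] = key_version  # optional: pin a specific key version
    if user_assigned_identity:
        # Resource ID of a user-assigned managed identity that has access to the key.
        block["identity"] = {"userAssignedIdentity": user_assigned_identity}
    return block


encryption = cmk_encryption("https://contoso-kv.vault.azure.net", "adf-cmk")
print(json.dumps({"properties": {"encryption": encryption}}, indent=2))
```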
